Model Context Protocol — Interaction of AI Models with external tools

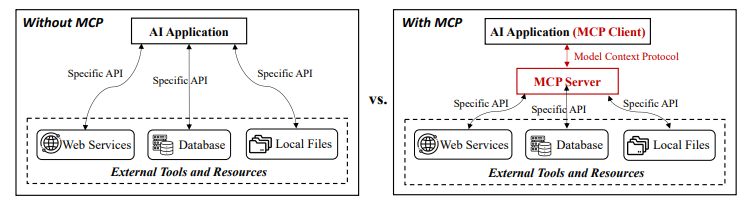

Now a days, we have been hearing a lot about this protocol in the AI world. MCP (Model Context Protocol) which was first introduced by Anthropic. To give a brief of what MCP protocol does is this is both server and client where MCP server is responsible for providing the integrations to the LLM with the external resources like databases, API calls etc., in the form of tools and the MCP client analyzes the user query and choose which tool I would like to interact with to get the information required for the user. There is a lot of interest currently on this topic because it simplifies the infrastructure enabling the LLM to be able to access multiple resources where as popular tools like LangChain and LlamaIndex provides interfaces to interact with the external services. But this integration is not part of LLM and developer are responsible for maintaining the integrations and handle the information provided to the LLM and handle the execution logic for each service. When the AI agents became popular which has the ability to execute the workflow, this integration where programmers has to explicitly tell what resources agents has to pick out of all the resources available to it and handle each one separately as the output of database is different from API call which can be JSON. This inhibits the AI agents from dynamically discovering and orchestrating the tools.

After the MCP introduced into the market by Anthropic, this provided the framework to dynamically communicate with the external tools. Instead of predefined tool mappings, MCP allows the AI agents to autonomously discover, select and orchestrate the tools based on task context. Since its release many major companies like OpenAI, Cloudfare, Cursor has integrated its LLM with the MCP server enabling the clients to interact with it.

MCP Architecture in simple terms

There are 3 major core components in MCP Architecture:

- MCP Host : The AI application that user interact with and provide the inputs to get the result.

- MCP Client: It is the client that manages communication between the host and one or more MCP servers. It is responsible for communicating to MCP server, queries the available functions and retrieve responses.

- MCP Server: This is the Server that is hosted at the LLM provider which has tools, resources and prompts.

According to the research paper, they have explained the MCP architecture very well and then came up with the lifecycle of the MCP Server as creation, operation and update. The paper also explains some of the security concerns at each stage of the MCP server.

They have also presented 3 use cases of the companies who has integrated this MCP server into their space. These are interesting and good to know

OpenAI : It has adopted MCP to standardize AI-to-tool communication. It has extended its Agent SDK with MCP support enabling developers to create AI agents that seamlessly interact with external tools. OpenAI’s plan to integrate MCP into the Responses API will streamline AI-to-tool communication, allowing AI models like ChatGPT to interact with tools dynamically without extra configuration. Additionally, OpenAI aims to extend MCP support to ChatGPT desktop applications, enabling AI assistants to handle various user tasks by connecting to remote MCP servers, further bridging the gap between AI models and external systems.

Cursor: With MCP, Cursor allows AI agents to interact with external APIs, access code repos and automate workflows directly within the IDE. When a developer issues a command within the IDE, the AI agent evaluates whether external tools are needed. If so, the agent sends a request to an MCP server, which identifies the appropriate tool and processes the task, such as running API tests, modifying files, or analyzing code.

Cloudfare: Cloudflare has played a pivotal role in transforming MCP from a local deployment model to a cloud-hosted architecture by introducing remote MCP server hosting. This approach eliminates the complexities associated with configuring MCP servers locally, allowing clients to connect to secure, cloud-hosted MCP servers seamlessly. The workflow begins with Cloudflare hosting MCP servers in secure cloud environments that are accessible via authenticated API calls. AI agents initiate requests to the Cloudflare MCP server using OAuth-based authentication, ensuring that only authorized entities can access the server.

Security Analysis

At a glance, here are the security concerns they see with the MCP server.

Creation Stage

Name Collision: Malicious entities can register MCP servers with similar names to legitimate ones, leading to impersonation attacks

Installer Spoofing: Attackers can distribute modified MCP server installers that introduce malicious code during installation

Code Injection/Backdoor: Malicious code can be embedded into the MCP server’s codebase, allowing attackers to maintain control over the server

Operation Stage

Tool Name Conflicts: Multiple tools with identical or similar names in the MCP server can lead to ambiguity and unintended tool invocation

Slash Command Overlap: Identical or similar commands defined by multiple tools can cause unintended actions

Sandbox Escape: Exploiting flaws in the sandbox implementation can allow attackers to gain unauthorized access to the host system

Update Stage

Privilege Persistence: Outdated or revoked privileges may remain active after an MCP server update, allowing unauthorized access

Vulnerable Versions: Lacks centralized platforms for auditing and enforcing security updates.

Configuration Drift: Unintended changes in system configuration over time can introduce exploitable gaps

Challenges that was presented

Centralized Security Oversight:

- Establish a centralized platform for auditing and enforcing security standards across MCP servers.

- Implement a unified package management system for MCP servers to ensure secure discovery and verification

Authentication and Authorization:

- Develop a standardized framework for managing authentication and authorization across MCP clients and servers.

- Implement robust authentication protocols and consistent access control policies

Debugging and Monitoring:

- Introduce comprehensive debugging and monitoring mechanisms to diagnose errors and assess system behavior during tool invocation

Consistency in Workflows:

- Implement effective state management and error recovery mechanisms to ensure consistent context across multi-step workflows

Scalability in Multi-Tenant Environments:

- Develop robust resource management and tenant-specific configuration policies to maintain performance and security in multi-tenant environments

Embedding MCP in Smart Environments:

- Ensure real-time responsiveness, interoperability, and security when integrating MCP into smart environments

Possible Solutions proposed

Namespace Policies and Cryptographic Verification:

- Establish strict namespace policies and implement cryptographic server verification to prevent name collision and installer spoofing

Secure Installation Framework:

- Develop a standardized, secure installation framework for MCP servers, enforcing package integrity checks

Advanced Validation Techniques:

- Implement advanced validation and anomaly detection techniques to identify and mitigate deceptive tool descriptions and command overlaps

Sandbox Security:

- Strengthen sandbox security by adopting rigorous code integrity verification, strict dependency management, and regular security audits

Automated Configuration Management:

- Automate configuration management and adopt infrastructure-as-code (IaC) practices to prevent configuration drift

By addressing these challenges and implementing the proposed solutions, we can enhance the security, scalability, and reliability of the MCP protocol, ensuring its sustainable growth and responsible development within the AI ecosystem.