Data Intensive System Design — How important is Reliability, Scalability and Maintainability

Many applications today deal with vast amount of data. Usually the application has to store the data, remember the intermediate data or search within huge data with some filters or periodically process chunks of data.

There are some key performance metrics how we measure the system that handle the data

Reliability

The system should continue to work correctly even in case of disaster. It should tolerate user mistakes and prevent unauthorized access. If the system achieves all these then it is called fault-tolerant or resilient system. Fault is deviating from its behavior but the failure is when whole system stops a service. It is important for the system designed to not make faults cause failures. We often encounter the faults like Hardware faults, Software errors and human errors.

Hardware faults — The main hardware issue is Hard disks crash or RAM becomes faulty or power grid has blackout or someone removed network cable which often happens in large data centers. To overcome we add redundancy to individual hardware components or set up RAID configurations and have dual power supplies or have hot swappable CPUs or have backup power. But this multi-machine redundancy was only required by a small number of application which is critical and needs high availability. A single server system can have planned downtimes whereas critical systems can be patched one node at a time without downtime of entire system.

Software errors — There might be some errors which can cause correlated faults across nodes like a software bug or runaway process that uses up shared resources like CPU, memory, disk space and network bandwidth. There is no quick solution to the problem of systematic faults in software. Monitoring the system can be a key in finding the faults early.

Human Errors — There can be human errors that can cause the faults. To avoid this we should design a system to minimize the opportunities for error, abstracted and decoupled which is tested thoroughly at all levels and allow easy recovery in case of failures with clean monitoring the error rates.

Reliability is an important factor where the bugs can lower the productivity and can cause huge loss to revenues. There might be situations where we might have to tradeoff the reliability to reduce the development cost or operational cost but it depends on use case and differs from each system design.

Scalability

The System should grow and handle high volume of traffic and and data. There is no guarentee that the system that worked today can work tomorrow. One reason can be as the load increases be it data or the requests to the service, the system should be able to handle large volumes of data.

What is load — We often describe the parameters such as requests per second or ratio of reads to writes or simultaneous active users or hit rate on a cache as load.

Performance — When we increase the load, the system resources if unchanged can hinder the performance but how do we calculate how much resources increase is required for the load. For this we need some numbers like say batch processing system it is throughput which is number of records per second or time taken to run a job on dataset of some size. But for a service we usually calculate the response time between client sending a request and receiving a response. Sometimes the latency is also used which is duration that a request is waiting to be handled.

We usually take the distribution of the response times as there might be outliers while handling some requests. So it is always preferred to take average of the metrics which might not be mean as it is affected by outliers, we can consider the median which is the mid point which is the percentile. Usually we call it as p90, p95 and p999. If 95th percentile response time is 1.5 seconds, that means 95 out of 100 requests take less than 1.5 seconds and 5 out of 100 requests take 1.5 seconds or more. High percentiles of response times also known as tail latencies. These percentiles are often used in Service Level Objectives and Service Level Agreements which are contracts that define expected performance and availability of the service.

The client may be waiting for a small number of slow requests to hold up the processing of subsequent requests an effect sometimes known as head-of-line blocking.

Approaches for copying with load — An architecture that supports one level may not fit the 10 times the load. If we have fast growing service then we might have to increase the resources. We might have to use vertical scaling which is increasing the resources like CPU and memory within the same machine or horizontal scaling which is adding multiple machines so that the load is distributed known as shared nothing architecture. Balancing both might be needed. Ususally to add machines we often called elastic which automatically scales up and is useful when the load is unpredictable. This is good for stateless applications. If it is stateful data systems replicating the data from single node to distributed setup is often complex.

An architecture should have right mixture of scaling so that the performance of the system can be optimal.

Maintainability

The system should maintain current behavior and adapt the system to new use cases. The cost of maintenance of a system is usually high compared to developing a new system. This includes fixing bugs, keeping it operational and investigating failures and adapting to new environments and maintaining the system free from technical debts. There are 3 design principles for software systems

Operability — Making it easy to run smoothly which includes monitoring health of system, restoring bad service, tracking down the problems, keeping the security patches and software updated, proactively solving issues, keeping environment stable by maintaining good visibility and support of the internals of the system and avoiding dependency on single systems, good documentations and self healing when appropriate and minimizing surprises.

Simplicity — As the projects gets larger it becomes complex and difficult to understand. This increases the cost of maintenance mainly due to tight coupling of modules, tangled dependencies, and many more. This increases the risk of introducing the bugs. One of the best solution is abstraction which keeps the code clean and simple to understand.

Evolvability — There can be changes in system’s requirements or can be revised business priorities or may be legal or regulatory changes. The system should be adaptable to the changes. One of the nice organizational process is Agile working patterns which is centered around the increasing the frequency of changes and using frameworks like Test Driven Development and refactoring it is very adaptable for any organization.

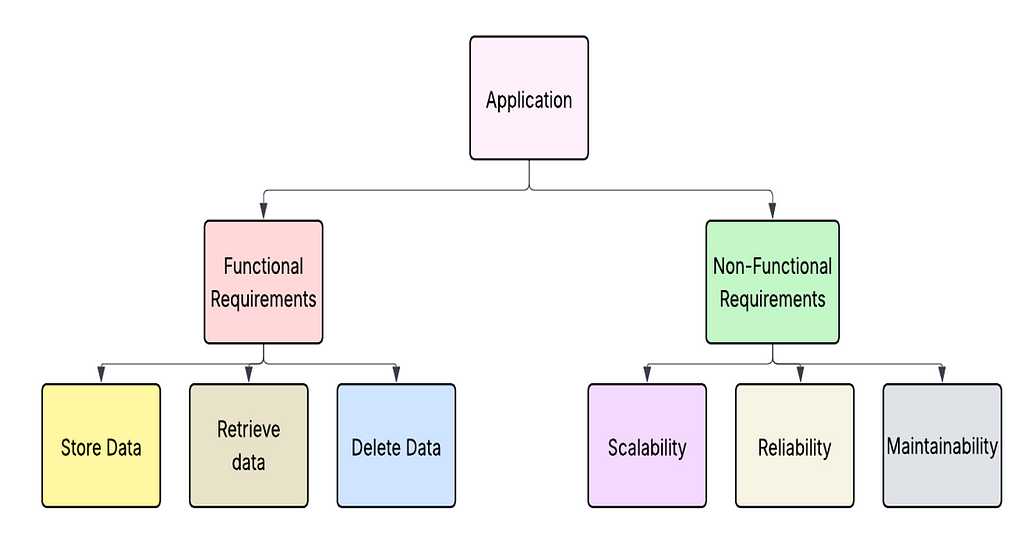

To summarize, an application can have functional and non functional requirements. Both are key for any application. Reliability, Scalability and Maintainability discussed in this article is some of the non functional requirements that every architect and developer should keep in their mind.