This week I explored some of the research papers that drove rapid change in the AI field. Here are some knowledge bites every AI enthusiast should know:

Ever wondered how we got from basic computers to ChatGPT, self-driving cars, and AI that can create art? It all started with brilliant researchers publishing papers that changed everything.

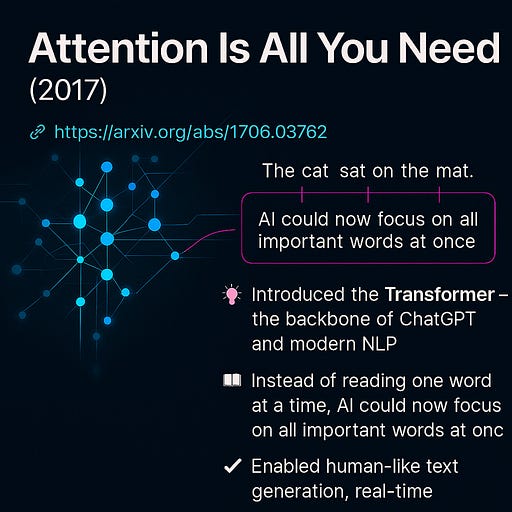

“Attention Is All You Need” (2017)

Created a new way for computers to understand language.

Link: https://arxiv.org/abs/1706.03762

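Think of this like teaching a computer to read a sentence by looking at all the words at once and working out how much each word matters to every other word, instead of reading one word at a time. This design, called the Transformer, also made it practical to train AI on huge amounts of text, which led to AI that understands and generates human-like text.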

The Transformer is the architecture behind ChatGPT (the “T” in GPT stands for Transformer), modern Google Translate, and most of today’s language AI. Without it, the AI assistants we use today would look very different.
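For the curious, here is a minimal sketch of the core calculation, scaled dot-product self-attention, in plain Python. It assumes toy 2-number word vectors and reuses the same vectors as queries, keys, and values; the real Transformer learns separate projections for each and runs several attention “heads” in parallel.

```python
import math

def softmax(scores):
    # Subtract the max before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention: every word looks at every word at once."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How well this word's query matches each word's key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        all_weights.append(weights)
        # The output is an attention-weighted average of all the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
    return outputs, all_weights

# Toy example (assumption): hand-picked vectors used as queries, keys, and values.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings, embeddings, embeddings)
for tok, w in zip(tokens, weights):
    print(tok, [round(x, 2) for x in w])
```

Each printed row shows how much one word “pays attention” to each of the three words, and every row sums to 1.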

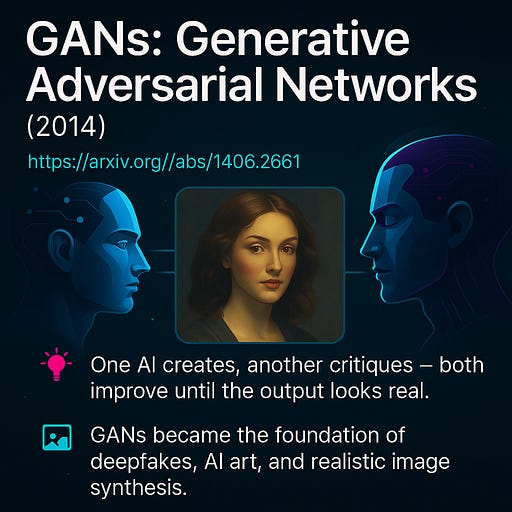

“Generative Adversarial Networks” (2014)

Created AI that can make fake but realistic content.

Link: https://arxiv.org/abs/1406.2661

Imagine two AIs competing: a generator tries to create fake photos, and a discriminator tries to spot the fakes. Each one improves by playing against the other until the fake photos become very hard to tell apart from real ones.

This created the foundation for AI that generates images, videos, and even synthetic voices. It’s behind many creative AI tools we see today.
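The competition can be written as a pair of losses. This sketch skips the neural networks entirely and assumes hypothetical discriminator outputs (the probability it assigns to “this is real”). It computes the discriminator loss from the paper’s objective, E[log D(x)] + E[log(1 − D(G(z)))], and the “non-saturating” generator loss the paper recommends in practice.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Negative of the GAN objective: D wants d_real -> 1 and d_fake -> 0."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)

def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D to call its fakes real (d_fake -> 1)."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)

# Hypothetical discriminator outputs for a few real and fake images.
d_real = [0.9, 0.8, 0.95]
d_fake_early = [0.1, 0.2, 0.05]   # early on, fakes are easy to spot
d_fake_later = [0.5, 0.45, 0.55]  # later, fakes have become convincing

print(discriminator_loss(d_real, d_fake_early), generator_loss(d_fake_early))
print(discriminator_loss(d_real, d_fake_later), generator_loss(d_fake_later))
```

As the fakes improve, the generator’s loss falls and the discriminator’s loss rises; when the discriminator can only guess 0.5 for everything, its loss reaches log 4, the equilibrium value from the paper.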

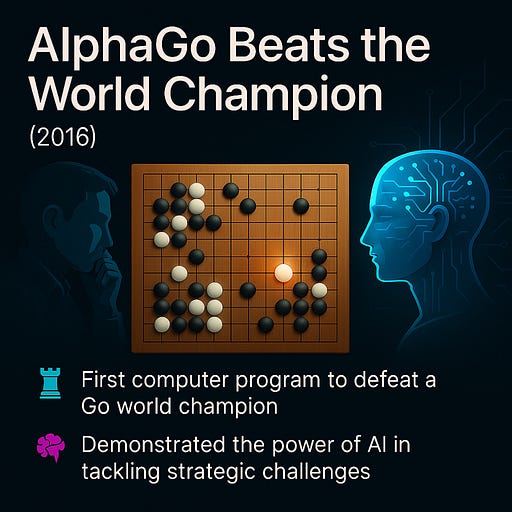

“AlphaGo: AI Beats World Champion” (2016)

Created AI that mastered the ancient game of Go.

Link: https://www.nature.com/articles/nature16961

Go is incredibly complex: there are more possible board positions than atoms in the observable universe. When AlphaGo beat Lee Sedol, one of the world’s strongest players, in March 2016, it shocked everyone because Go was thought to require human intuition and creativity.

This moment made the whole world realize AI could do things we thought only humans could do. It sparked massive investment and interest in AI research.

“Deep Residual Learning for Image Recognition” (2015)

Solved the problem of training very deep networks for computer vision.

Link: https://arxiv.org/abs/1512.03385

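Before this, stacking more layers often made networks worse, because very deep networks were hard to train. These researchers added “shortcut” connections that let each group of layers learn only a small correction to its input. That made networks with more than 100 layers trainable and far more accurate, and it won the 2015 ImageNet competition.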

Residual connections now show up in many vision systems, such as medical-scan analysis and face recognition, and they are a standard building block of Transformers too.
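The shortcut idea fits in a few lines: a residual block outputs F(x) + x instead of just F(x). This plain-Python sketch uses tiny hand-written weights (a hypothetical example, not a trained network) to show why that helps with depth: if a block’s weights are all zero, a residual block simply passes its input through, while a plain block wipes it out.

```python
def relu(v):
    return [max(0.0, x) for x in v]

def linear(weights, v):
    # Matrix-vector product: one output per weight row (biases omitted for simplicity).
    return [sum(w * x for w, x in zip(row, v)) for row in weights]

def plain_block(w1, w2, x):
    # Without a shortcut, the layers must learn the whole mapping themselves.
    return relu(linear(w2, relu(linear(w1, x))))

def residual_block(w1, w2, x):
    # ResNet-style: the layers learn a correction F(x), which is added back to the input.
    fx = linear(w2, relu(linear(w1, x)))
    return relu([a + b for a, b in zip(fx, x)])

x = [0.5, 2.0]
zeros = [[0.0, 0.0], [0.0, 0.0]]
h_plain, h_res = x, x
for _ in range(50):  # a "very deep" stack of 50 blocks
    h_plain = plain_block(zeros, zeros, h_plain)
    h_res = residual_block(zeros, zeros, h_res)
print(h_plain, h_res)  # [0.0, 0.0] [0.5, 2.0]
```

Real ResNets use convolutions and batch normalization inside F, but the “add the input back” step works the same way.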

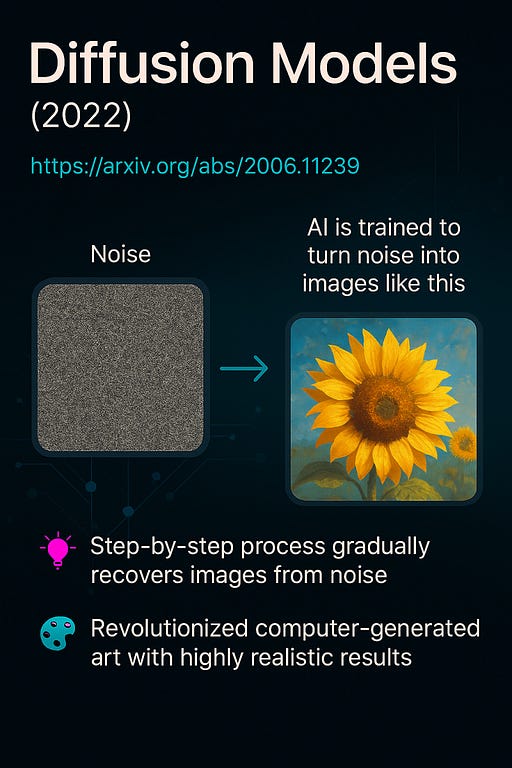

“AI That Creates Images from Noise” (2020)

Showed a practical way for AI to create high-quality pictures by gradually removing noise.

Link: https://arxiv.org/abs/2006.11239

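Think of it like an AI that starts with static on a TV screen and gradually turns it into a beautiful painting by removing the noise bit by bit. It learns this skill in training by watching real images get buried under more and more noise and learning to predict the noise that was added.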

Diffusion models built on this technique power image generators like DALL-E 2 and Stable Diffusion, which create images from text descriptions.
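Here is a sketch of the noising side in plain Python, using the linear noise schedule from the DDPM paper (1,000 steps, with the per-step noise rising from 0.0001 to 0.02). The 4-pixel image is made up, and instead of a trained network we cheat by handing `recover_x0` the true noise. A real model has to predict that noise, and it removes it gradually over many steps rather than in one jump.

```python
import math
import random

T = 1000
# Linear noise schedule from the paper: beta rises from 1e-4 to 0.02.
betas = [1e-4 + (0.02 - 1e-4) * t / (T - 1) for t in range(T)]

# alpha_bar[t] = product of (1 - beta) up to step t: how much original signal survives.
alpha_bar = []
running = 1.0
for b in betas:
    running *= 1.0 - b
    alpha_bar.append(running)

def add_noise(x0, t, rng):
    """Jump straight to step t: x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise."""
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    a = alpha_bar[t]
    xt = [math.sqrt(a) * p + math.sqrt(1.0 - a) * n for p, n in zip(x0, noise)]
    return xt, noise

def recover_x0(xt, t, predicted_noise):
    # If the noise is known (or predicted well), subtracting it recovers the clean image.
    a = alpha_bar[t]
    return [(p - math.sqrt(1.0 - a) * n) / math.sqrt(a) for p, n in zip(xt, predicted_noise)]

rng = random.Random(0)
image = [0.8, -0.3, 0.5, 0.1]  # a hypothetical 4-pixel "image"
noisy, noise = add_noise(image, T - 1, rng)
print(alpha_bar[T - 1])  # tiny: almost no signal left at the last step
restored = recover_x0(noisy, T - 1, noise)
print(restored)
```

By the last step the image is almost pure static, which is why generation can start from random noise and work backwards.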

“BERT: Teaching AI to Read Better” (2018)

Created an AI that understands context in language.

Link: https://arxiv.org/abs/1810.04805

BERT reads each word together with the words on both sides of it, so it can tell that the same word means different things in different sentences, like “bank” in “river bank” versus “bank account.” It’s like the difference between a smart reader and someone just looking up words in a dictionary.

BERT made search engines smarter (Google started using it in Search in 2019) and helped build chatbots and other tools that better understand what you mean.
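BERT learns this during pre-training by hiding words and guessing them from the context on both sides. Below is a sketch of the masking step described in the paper: about 15% of tokens are chosen, and of those, 80% become `[MASK]`, 10% become a random word, and 10% stay unchanged. Assumptions: whole words stand in for BERT’s WordPiece tokens, and each token is picked independently with probability `mask_prob` rather than selecting exactly 15%.

```python
import random

def mask_tokens(tokens, vocab, rng, mask_prob=0.15):
    """BERT-style masking: returns the corrupted input and the words the model must predict."""
    inputs = list(tokens)
    targets = [None] * len(tokens)  # None = no prediction needed at this position
    for i, tok in enumerate(tokens):
        if rng.random() < mask_prob:
            targets[i] = tok
            roll = rng.random()
            if roll < 0.8:
                inputs[i] = "[MASK]"           # 80%: hide the word
            elif roll < 0.9:
                inputs[i] = rng.choice(vocab)  # 10%: swap in a random word
            # remaining 10%: keep the original word unchanged
    return inputs, targets

sentence = "i sat on the river bank to watch the boats".split()
vocab = sorted(set(sentence))
rng = random.Random(42)
# mask_prob is raised to 0.3 here only so the short demo sentence shows some masking.
masked, targets = mask_tokens(sentence, vocab, rng, mask_prob=0.3)
print(masked)
print(targets)
```

To fill in a hidden “bank”, the model has to use both “river” before it and “to watch the boats” after it, which is exactly the two-sided reading described above.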

“AI That Learns Games by Playing” (2013)

Created AI that learns by trial and error, just like humans.

Link: https://arxiv.org/abs/1312.5602

These researchers taught an AI to play Atari video games using only the screen pixels and the score, without any game-specific instructions. It played over and over, and the rewards it collected taught it which moves worked.

This approach, called deep reinforcement learning, paved the way for AI that masters complex games like Go and chess, and for research on controlling robots and driving cars. It showed AI could learn skills we never explicitly taught it.
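The trial-and-error loop behind this is Q-learning: the AI keeps a score for each action in each situation and nudges it toward “reward now plus the best score reachable next.” This sketch is a simplified, tabular version on a made-up 5-cell corridor with a reward only at the far end. The paper’s DQN replaces the table with a neural network reading raw screen pixels and adds tricks such as experience replay; the parameter values here are illustrative choices, not the paper’s.

```python
import random

N_STATES = 5        # positions 0..4 in a tiny corridor; reaching 4 ends the episode
ACTIONS = [-1, +1]  # move left or move right

def step(state, action):
    nxt = min(max(state + action, 0), N_STATES - 1)
    reward = 1.0 if nxt == N_STATES - 1 else 0.0
    return nxt, reward, nxt == N_STATES - 1

def train(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: usually take the best-known action, sometimes explore.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                best = max(q[state])
                a = rng.choice([i for i in range(2) if q[state][i] == best])
            nxt, reward, done = step(state, ACTIONS[a])
            # Q-learning target: reward now plus the discounted best score of the next state.
            target = reward if done else reward + gamma * max(q[nxt])
            q[state][a] += alpha * (target - q[state][a])
            state = nxt
    return q

q = train()
policy = ["right" if row[1] > row[0] else "left" for row in q[:-1]]
print(policy)
```

Nobody tells the agent that “right” is correct; it discovers this purely from the rewards it stumbles into.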

“U-Net: AI for Medical Images” (2015)

Created AI that can identify and outline objects in images.

Link: https://arxiv.org/abs/1505.04597

Originally designed for biomedical images, U-Net labels every single pixel, so it can mark exactly where something is, like outlining every cell in a microscope image or a tumor in a scan.

U-Net-style models are now widely used in medical image analysis. The same architecture also became the noise-removing network inside many AI image generators, including Stable Diffusion.

“Teaching AI to Pay Attention” (2014)

Gave AI the ability to focus on important parts while ignoring the rest.

Link: https://arxiv.org/abs/1409.0473

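Like teaching someone to focus on the most important words in a long email, this research taught AI to pay attention to what matters most: while translating, the model looks back at the input sentence and focuses on the words most relevant to the word it is writing next.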

This “attention” idea became the core of the Transformer (the “Attention Is All You Need” paper above) and, through it, of most modern language AI, including today’s translation systems and chatbots.
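Here is a sketch of this kind of attention in plain Python. The paper scores each input word with a small learned network, v · tanh(W s + U h), where s is the translator’s current state and h is an input word’s encoding; the scores go through a softmax and are used to blend the input encodings into one “context” vector. The weights and vectors below are hypothetical hand-picked numbers rather than learned ones.

```python
import math

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def additive_attention(decoder_state, encoder_states, W, U, v):
    """Bahdanau-style attention: score each input word, softmax, then blend."""
    ws = matvec(W, decoder_state)
    scores = []
    for h in encoder_states:
        uh = matvec(U, h)
        # score = v . tanh(W s + U h)
        scores.append(sum(vi * math.tanh(a + b) for vi, a, b in zip(v, ws, uh)))
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: attention-weighted sum of the input word encodings.
    dim = len(encoder_states[0])
    context = [sum(w * h[j] for w, h in zip(weights, encoder_states)) for j in range(dim)]
    return weights, context

encoder_states = [[1.0, 0.0], [0.0, 1.0], [-1.0, -1.0]]  # hypothetical encodings of 3 input words
decoder_state = [0.0, 2.0]                               # hypothetical translator state
identity = [[1.0, 0.0], [0.0, 1.0]]
weights, context = additive_attention(decoder_state, encoder_states, identity, identity, [1.0, 1.0])
print([round(w, 3) for w in weights], context)
```

The weights say how strongly the model “looks at” each input word for the next output word; the Transformer later swapped this small scoring network for a simpler dot product.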

These papers didn’t just make small improvements — they completely changed what was possible. Each one opened doors to new technologies that seemed like science fiction just years before.

Learning is fun!! Never stop exploring!!

#AI #Technology #Innovation #Research #ArtificialIntelligence #SimpleTech #TechExplained