Integrated MCP into My RAG Project — Now My AI Assistant Can Talk to Any LLM Seamlessly! 🤖📚

😃 𝗧𝗵𝗶𝘀 𝘄𝗲𝗲𝗸, 𝗜’𝗺 𝗲𝘅𝗰𝗶𝘁𝗲𝗱 𝘁𝗼 𝘀𝗵𝗮𝗿𝗲 𝘀𝗼𝗺𝗲𝘁𝗵𝗶𝗻𝗴 𝗜 𝗲𝘅𝗽𝗹𝗼𝗿𝗲𝗱 𝗶𝗻 𝗺𝘆 𝗔𝗜 𝗷𝗼𝘂𝗿𝗻𝗲𝘆 — 𝗶𝗻𝘁𝗲𝗴𝗿𝗮𝘁𝗶𝗻𝗴 𝗠𝗖𝗣 𝗶𝗻𝘁𝗼 𝗺𝘆 𝗥𝗔𝗚 𝗽𝗿𝗼𝗷𝗲𝗰𝘁 — 𝙄𝙣𝙩𝙧𝙤𝙙𝙪𝙘𝙞𝙣𝙜 𝙎𝙝𝙞𝙡𝙥𝙖’𝙨 𝙇𝙚𝙖𝙧𝙣𝙞𝙣𝙜 𝘼𝙨𝙨𝙞𝙨𝙩𝙖𝙣𝙩!

You may have recently come across MCP (Model Context Protocol), the open standard introduced by Anthropic. I took the initiative to enhance my Retrieval-Augmented Generation (RAG) project with it, and here’s why it matters. 👇

𝗪𝗵𝗮𝘁 𝗶𝘀 𝗠𝗖𝗣 𝗶𝗻 𝘀𝗶𝗺𝗽𝗹𝗲 𝘁𝗲𝗿𝗺𝘀?

Let’s take my project as an example. It has two core functionalities:

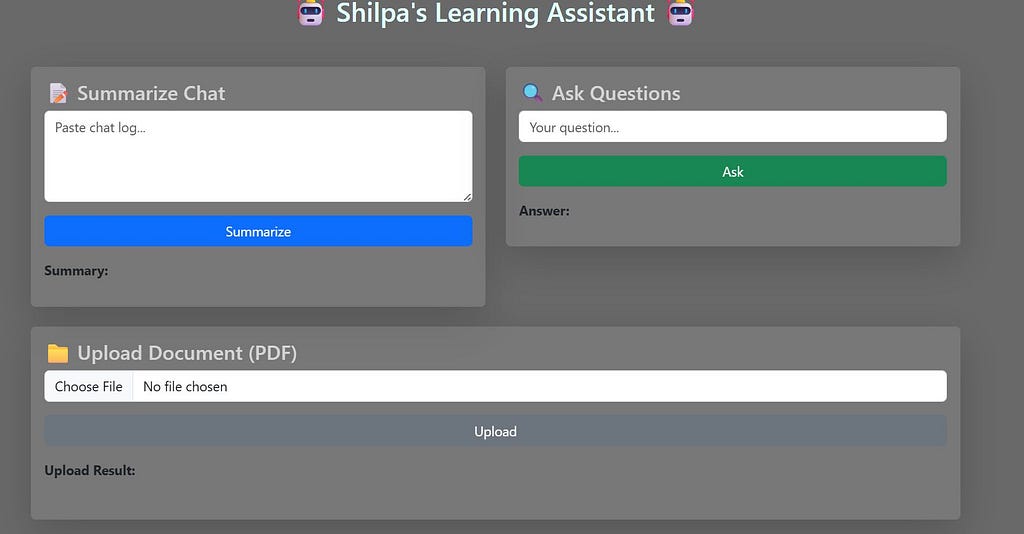

• 𝘚𝘶𝘮𝘮𝘢𝘳𝘪𝘻𝘢𝘵𝘪𝘰𝘯 𝘰𝘧 𝘤𝘩𝘢𝘵 𝘤𝘰𝘯𝘵𝘦𝘯𝘵

• 𝘘𝘶𝘦𝘴𝘵𝘪𝘰𝘯 𝘈𝘯𝘴𝘸𝘦𝘳𝘪𝘯𝘨 𝘧𝘳𝘰𝘮 𝘢 𝘱𝘦𝘳𝘴𝘰𝘯𝘢𝘭 𝘬𝘯𝘰𝘸𝘭𝘦𝘥𝘨𝘦 𝘳𝘦𝘱𝘰𝘴𝘪𝘵𝘰𝘳𝘺 (𝘮𝘶𝘭𝘵𝘪𝘱𝘭𝘦 𝘥𝘰𝘤𝘶𝘮𝘦𝘯𝘵𝘴)

While both use LLMs under the hood, they differ in how they retrieve and process data:

1. Summarization processes all relevant nodes (document chunks indexed as vector embeddings) and condenses them into a concise summary.

2. QA retrieves only the top-k nodes most similar to the question and generates the answer from them.
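To make the difference between the two paths concrete, here is a minimal, framework-free sketch. It assumes each node is a text chunk with a precomputed embedding; the tiny 3-dimensional vectors, the helper names `nodes_for_summary`, `nodes_for_qa`, and `build_prompt`, and the `top_k=2` default are all hypothetical. A real project would compute embeddings with an embedding model and query a vector store instead of a Python list.

```python
import math

# Hypothetical nodes: text chunks with made-up 3-d embeddings.
# A real pipeline would compute embeddings with an embedding model.
NODES = [
    {"text": "MCP connects AI apps to external tools.", "embedding": [0.9, 0.1, 0.0]},
    {"text": "RAG retrieves relevant chunks before generating.", "embedding": [0.1, 0.9, 0.1]},
    {"text": "Vector search ranks chunks by similarity.", "embedding": [0.2, 0.8, 0.3]},
    {"text": "A note about lunch plans.", "embedding": [0.0, 0.1, 0.9]},
]


def cosine(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def nodes_for_summary(nodes):
    """Summarization path: pass every node's text to the LLM, no filtering."""
    return [n["text"] for n in nodes]


def nodes_for_qa(nodes, query_embedding, top_k=2):
    """QA path: keep only the top_k nodes most similar to the query."""
    ranked = sorted(nodes, key=lambda n: cosine(n["embedding"], query_embedding), reverse=True)
    return [n["text"] for n in ranked[:top_k]]


def build_prompt(instruction, chunks):
    """Assemble the text that would be sent to whichever LLM handles the task."""
    context = "\n".join(f"- {c}" for c in chunks)
    return f"{instruction}\n\nContext:\n{context}"


if __name__ == "__main__":
    summary_prompt = build_prompt("Summarize the content.", nodes_for_summary(NODES))
    qa_chunks = nodes_for_qa(NODES, query_embedding=[0.1, 0.9, 0.2])
    qa_prompt = build_prompt("Answer: what does RAG do?", qa_chunks)
    print(len(nodes_for_summary(NODES)), len(qa_chunks))  # 4 2
    print(qa_prompt)
```

Both paths end in the same kind of prompt; only the node selection differs, which is why the two features can share one pipeline yet still be served by different LLMs.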

Now, here’s the twist: 𝘐 𝘮𝘪𝘨𝘩𝘵 𝘶𝘴𝘦 𝘥𝘪𝘧𝘧𝘦𝘳𝘦𝘯𝘵 𝘓𝘓𝘔𝘴 𝘧𝘰𝘳 𝘦𝘢𝘤𝘩 — 𝘴𝘢𝘺, 𝘊𝘭𝘢𝘶𝘥𝘦 𝘧𝘰𝘳 𝘴𝘶𝘮𝘮𝘢𝘳𝘪𝘻𝘢𝘵𝘪𝘰𝘯 𝘢𝘯𝘥 𝘝𝘦𝘳𝘵𝘦𝘹 𝘈𝘐 𝘧𝘰𝘳 𝘲𝘶𝘦𝘳𝘺𝘪𝘯𝘨. But each provider typically exposes its own API, which makes it hard to scale or swap models.

𝗧𝗵𝗶𝘀 𝗶𝘀 𝘄𝗵𝗲𝗿𝗲 𝗠𝗖𝗣 𝘀𝗵𝗶𝗻𝗲𝘀

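• MCP (Model Context Protocol) is an open protocol, built on JSON-RPC 2.0, that standardizes how AI applications connect to external tools and data. Any MCP-compatible client can use an MCP server in the same way, whichever LLM powers it.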

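• The MCP Server exposes capabilities (tools, resources, and prompts) through that common schema. In my project, it offers summarization and document QA and routes each request to the right handler based on its type (context.type).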

• The MCP Client (your app, chatbot, etc.) discovers the server’s capabilities and sends requests in the same standard format, no matter which LLM does the work behind the server.
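Under the hood, MCP messages are JSON-RPC 2.0: a client lists a server’s tools with `tools/list` and invokes one with `tools/call`, passing the tool `name` and its `arguments`. The sketch below only builds and parses those messages with the standard library. The tool names `summarize_chat` and `ask_documents` and their argument keys are hypothetical, and a real client would also perform the `initialize` handshake and use a transport such as stdio, which the official MCP SDKs take care of.

```python
import itertools
import json

# JSON-RPC request ids must be unique within a session; a counter is enough here.
_ids = itertools.count(1)


def make_request(method, params=None):
    """Wrap a method call in the JSON-RPC 2.0 envelope that MCP uses."""
    msg = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        msg["params"] = params
    return msg


def list_tools_request():
    # Ask the server which tools it offers (name, description, inputSchema).
    return make_request("tools/list")


def call_tool_request(name, arguments):
    # Invoke one tool by name; arguments should match that tool's inputSchema.
    return make_request("tools/call", {"name": name, "arguments": arguments})


def extract_text(response):
    """Return the text content of a tools/call response, raising on errors."""
    if "error" in response:  # protocol-level failure (bad method, unknown tool, ...)
        raise RuntimeError(response["error"].get("message", "unknown error"))
    result = response["result"]
    texts = [c["text"] for c in result.get("content", []) if c.get("type") == "text"]
    if result.get("isError"):  # the tool ran but reported a failure
        raise RuntimeError(" ".join(texts) or "tool reported an error")
    return "\n".join(texts)


if __name__ == "__main__":
    # Hypothetical tool names and arguments for the two features of this project.
    print(json.dumps(call_tool_request("summarize_chat", {"chat_id": "demo"})))
    print(json.dumps(call_tool_request("ask_documents", {"question": "What is RAG?"})))
    sample = {"jsonrpc": "2.0", "id": 2,
              "result": {"content": [{"type": "text", "text": "RAG = retrieval + generation."}],
                         "isError": False}}
    print(extract_text(sample))  # RAG = retrieval + generation.
```

Because the envelope is identical for every tool, the chatbot UI only needs to know tool names and argument shapes, not which model answers.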

𝗪𝗵𝘆 𝗶𝘀 𝘁𝗵𝗶𝘀 𝘂𝘀𝗲𝗳𝘂𝗹?

💡 AI is evolving fast — Hugging Face alone has over 1.6 million models today. With MCP, I can swap LLMs without rewriting my app logic.

If I host my own LLM, I just need to expose its capabilities as tools on an MCP-compliant server. The client (like my chatbot UI) then sends summarization, query, or upload requests in the same standard format: simple, scalable, and future-ready.
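Here is one way the "swap LLMs without rewriting app logic" idea could look on the server side. It is a toy, in-process dispatcher written for illustration, not the official MCP Python SDK (which provides helpers such as `FastMCP` and its `@mcp.tool()` decorator). The tool names `summarize` and `ask`, the `ToolServer` class, and the stub functions `fake_claude` and `fake_gemini` are all hypothetical stand-ins for real model clients.

```python
from typing import Callable, Dict

# An LLM backend is modeled as a plain function from prompt to text.
# Hypothetical stubs stand in for real vendor SDKs or a self-hosted model.
LLM = Callable[[str], str]


def fake_claude(prompt: str) -> str:
    return f"[claude-stub] {prompt}"


def fake_gemini(prompt: str) -> str:
    return f"[gemini-stub] {prompt}"


# Every tool in this sketch takes a single string argument named "text".
TEXT_SCHEMA = {
    "type": "object",
    "properties": {"text": {"type": "string"}},
    "required": ["text"],
}


class ToolServer:
    """Toy MCP-style server: stable tool names in front, swappable LLMs behind."""

    def __init__(self, backends: Dict[str, LLM]):
        # Maps each tool name to the LLM that currently serves it.
        self.backends = dict(backends)

    def swap_backend(self, tool: str, llm: LLM) -> None:
        # Changing the model touches only this mapping, never the client.
        if tool not in self.backends:
            raise KeyError(tool)
        self.backends[tool] = llm

    def handle(self, request: dict) -> dict:
        """Answer a JSON-RPC 2.0 tools/list or tools/call request."""
        rid = request.get("id")

        def error(code: int, message: str) -> dict:
            return {"jsonrpc": "2.0", "id": rid, "error": {"code": code, "message": message}}

        method = request.get("method")
        if method == "tools/list":
            tools = [
                {"name": name, "description": f"{name} via RAG", "inputSchema": TEXT_SCHEMA}
                for name in self.backends
            ]
            return {"jsonrpc": "2.0", "id": rid, "result": {"tools": tools}}
        if method == "tools/call":
            params = request.get("params", {})
            llm = self.backends.get(params.get("name"))
            if llm is None:
                return error(-32602, f"Unknown tool: {params.get('name')}")
            text = llm(params.get("arguments", {}).get("text", ""))
            content = [{"type": "text", "text": text}]
            return {"jsonrpc": "2.0", "id": rid, "result": {"content": content, "isError": False}}
        return error(-32601, "Method not found")


if __name__ == "__main__":
    server = ToolServer({"summarize": fake_claude, "ask": fake_gemini})
    call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
            "params": {"name": "ask", "arguments": {"text": "What is MCP?"}}}
    print(server.handle(call)["result"]["content"][0]["text"])
    server.swap_backend("ask", fake_claude)  # same request, different model
    print(server.handle(call)["result"]["content"][0]["text"])
```

The client keeps sending the same `tools/call` request; only the mapping from tool name to model changes, which is the decoupling this post is about.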

𝗪𝗵𝗮𝘁 𝗜 𝗯𝘂𝗶𝗹𝘁

In my previous post, I shared how my RAG framework could extract insights from research papers.

Now, I’ve made it more robust and modular by implementing:

✅ A custom MCP Server that handles both summarization and document QA

✅ A local MCP Client that powers a chatbot UI with standardized communication

Here’s a snapshot of my chatbot UI running on my local setup!

🛠️ GitHub: https://lnkd.in/gxmEW2G9

🌟 Super excited to keep learning and building. If you’re working with RAG or LLM ops, I highly recommend exploring MCP!