AWS Series — S3 Technical Essentials

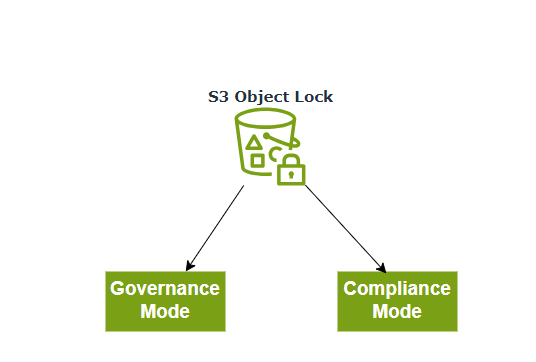

What is S3 Object Lock?

You can use S3 Object Lock to store objects using a write once, read many (WORM) model. It can help prevent objects from being deleted or modified for a fixed amount of time or indefinitely.

You can use S3 Object Lock to meet regulatory requirements that require WORM storage, or add an extra layer of protection against object changes and deletion.

S3 Object Lock has two retention modes:

Governance Mode

In governance mode, users can’t overwrite or delete an object version or alter its lock settings unless they have special permissions.

With governance mode, you protect objects against being deleted by most users, but you can still grant some users permission to alter the retention settings or delete the object if necessary.

Compliance Mode

In compliance mode, a protected object version cannot be overwritten or deleted by any user, including the root user in your AWS account. When an object is locked in compliance mode, its retention mode can’t be changed and its retention period can’t be shortened.

Compliance mode ensures an object version can’t be overwritten or deleted for the duration of the retention period.

Retention Periods

A retention period protects an object version for a fixed amount of time. When you place a retention period on an object version, Amazon S3 stores a timestamp in the object version’s metadata to indicate when the retention period expires.

After the retention period expires, the object version can be overwritten or deleted unless you also placed a legal hold on it.

Legal Hold

S3 Object Lock also enables you to place a legal hold on an object version. Like a retention period, a legal hold prevents an object version from being overwritten or deleted. However, a legal hold doesn’t have an associated retention period and remains in effect until removed. Legal holds can be freely placed and removed by any user who has the s3:PutObjectLegalHold permission.
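The way the two modes, the retention period, and a legal hold combine can be sketched in a few lines of Python. This is a simplified model of the rules described above, not the S3 API: the `ObjectVersionLock` class, the `can_delete` function, and the `bypass_governance` flag (standing in for the "special permissions") are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Optional


@dataclass
class ObjectVersionLock:
    """Hypothetical model of the lock state kept for one object version."""
    mode: Optional[str] = None               # "GOVERNANCE", "COMPLIANCE", or None
    retain_until: Optional[datetime] = None  # expiry timestamp of the retention period
    legal_hold: bool = False


def can_delete(lock: ObjectVersionLock, now: datetime,
               bypass_governance: bool = False) -> bool:
    """Return True if the object version may be overwritten or deleted."""
    if lock.legal_hold:
        return False  # a legal hold applies until it is removed
    if lock.mode is None or lock.retain_until is None or now >= lock.retain_until:
        return True   # no retention set, or the retention period has expired
    if lock.mode == "GOVERNANCE":
        return bypass_governance  # only users with special permissions
    return False      # COMPLIANCE: no user, including the root user


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
expiry = datetime(2025, 1, 1, tzinfo=timezone.utc)

governance = ObjectVersionLock("GOVERNANCE", expiry)
compliance = ObjectVersionLock("COMPLIANCE", expiry)

print(can_delete(governance, now))                          # False
print(can_delete(governance, now, bypass_governance=True))  # True
print(can_delete(compliance, now, bypass_governance=True))  # False
print(can_delete(compliance, expiry))                       # True
```

In this sketch the legal hold is checked first because it is independent of the retention period: an object version stays protected while the hold is in place, even after the retention date has passed.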

What is Glacier Vault Lock?

S3 Glacier Vault Lock allows you to easily deploy and enforce compliance controls for individual S3 Glacier vaults with a vault lock policy. You can specify controls, such as WORM, in a vault lock policy and lock the policy from future edits. Once locked, the policy can no longer be changed.
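As an illustration, a vault lock policy is an ordinary JSON policy document. The sketch below builds one that denies deleting archives younger than a given number of days, which is one common way to express a WORM-style control. The vault ARN, account ID, and the `build_vault_lock_policy` helper are placeholders for illustration; check the exact condition keys against the S3 Glacier documentation before locking a policy, since a locked policy can no longer be changed.

```python
import json

# Hypothetical identifiers: replace with your own Region, account ID, and vault name.
VAULT_ARN = "arn:aws:glacier:us-east-1:111122223333:vaults/example-vault"


def build_vault_lock_policy(vault_arn: str, min_age_days: int) -> str:
    """Return a JSON policy that denies deleting archives younger than min_age_days."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "deny-delete-before-retention",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "glacier:DeleteArchive",
                "Resource": vault_arn,
                "Condition": {
                    # Archive age in days, compared as a number.
                    "NumericLessThan": {"glacier:ArchiveAgeInDays": str(min_age_days)}
                },
            }
        ],
    }
    return json.dumps(policy, indent=2)


print(build_vault_lock_policy(VAULT_ARN, 365))
```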

Points to Remember for S3 Object Lock and Glacier Vault Lock

- Use S3 Object Lock to store objects using a write once, read many (WORM) model

- Object Lock can be applied to individual objects or across the bucket as a whole.

- Object Lock comes in two modes: governance mode and compliance mode.

- With Compliance mode, a protected object version cannot be overwritten or deleted by any user, including the root user in your AWS account.

- With Governance mode, users cannot overwrite or delete an object version or alter its lock settings unless they have special permissions.

- S3 Glacier Vault Lock allows you to easily deploy and enforce compliance controls for individual S3 Glacier vaults with a vault lock policy.

- You can specify controls, such as WORM, in a vault lock policy and lock the policy from future edits. Once locked, the policy can no longer be changed.

S3 Object Encryption

1. Encryption in Transit

- SSL/TLS

- HTTPS

2. Encryption at Rest: Server-Side Encryption

- SSE-S3: S3 managed keys, using AES-256 encryption

- SSE-KMS: AWS Key Management Service (KMS) managed keys

- SSE-C: Customer-provided keys

3. Encryption at Rest: Client-Side Encryption

- You can encrypt the files yourself before you upload them to S3

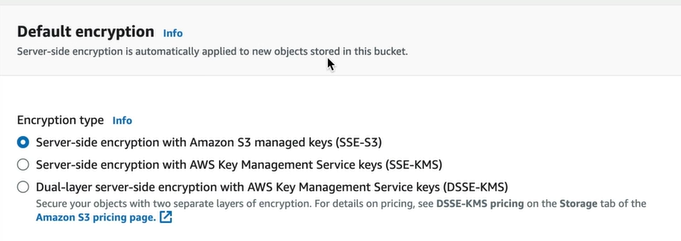

Server-Side Encryption

- Enabled by default

- All Amazon S3 buckets have encryption configured by default

- All objects are automatically encrypted by using server-side encryption with Amazon S3 managed keys (SSE-S3)

- This encryption setting applies to all objects in the bucket by default

Enforcing Server-Side Encryption

To have a file encrypted at upload time, include the x-amz-server-side-encryption parameter in the header of the PUT request. It tells S3 to encrypt the object at the time of upload, using the specified encryption method.

The parameter has two options:

- x-amz-server-side-encryption: AES256 (SSE-S3, S3 managed keys)

- x-amz-server-side-encryption: aws:kms (SSE-KMS, KMS managed keys)

For example, a PUT request whose header carries x-amz-server-side-encryption: AES256 tells S3 to encrypt the file using SSE-S3 (AES-256) at the time of upload.

You can create a bucket policy that denies any S3 PUT request that doesn’t include the x-amz-server-side-encryption parameter in the request header.
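A minimal sketch of such a bucket policy, built as a Python dictionary, is shown below. The bucket name and both helper functions are hypothetical; `request_would_be_denied` is only a local approximation of the check for illustration, since the real evaluation happens inside S3.

```python
import json


def build_require_sse_policy(bucket_name: str) -> dict:
    """Bucket policy that denies PutObject when the encryption header is absent."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyUploadsWithoutEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
                # "Null": "true" matches requests where the key is missing.
                "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
            }
        ],
    }


def request_would_be_denied(headers: dict) -> bool:
    """Local approximation: deny when the PUT request lacks the header."""
    return "x-amz-server-side-encryption" not in {name.lower() for name in headers}


# "example-bucket" is a placeholder name.
print(json.dumps(build_require_sse_policy("example-bucket"), indent=2))
print(request_would_be_denied({"Content-Type": "text/plain"}))              # True
print(request_would_be_denied({"x-amz-server-side-encryption": "AES256"}))  # False
```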

Go to AWS Management Console > S3 > Create Bucket

In the default settings, default encryption is already enabled.

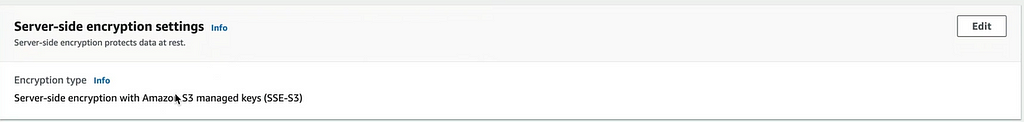

If you upload an object and open its Properties, you can see that server-side encryption is enabled.

Optimizing S3 Performance

S3 prefixes: a bucket has a name, and the objects inside it are organized into folders and subfolders. The prefix is simply the folder and subfolder path.

For example, bucket-name/folder1/subfolder1 → /folder1/subfolder1

In the above case, /folder1/subfolder1 is the prefix.

In other words, the prefix is the folder path inside the bucket, without the bucket name or the object’s file name.
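As a small illustration, the prefix can be derived from an object key by dropping the file name. The `prefix_of` helper and the sample keys are hypothetical, and the leading slash simply follows the notation used in the example above.

```python
def prefix_of(key: str) -> str:
    """Return the folder path of an object key, without the object's file name."""
    folders, separator, _file_name = key.rpartition("/")
    # Keys with no "/" sit at the top level of the bucket and have no prefix.
    return "/" + folders if separator else ""


print(prefix_of("folder1/subfolder1/report.csv"))  # /folder1/subfolder1
print(prefix_of("folder2/image.png"))              # /folder2
print(prefix_of("readme.txt"))                     # (empty string)
```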

How do prefixes influence S3 performance?

S3 has extremely low latency. You can get the first byte out of S3 within 100–200 milliseconds. You can also achieve a high number of requests: 3500 PUT/POST/DELETE and 5500 GET/HEAD requests per second per prefix.

- You can get better performance by spreading your reads across different prefixes. For example, with 2 prefixes you can achieve 11,000 GET/HEAD requests per second.

- With 4 prefixes, you can achieve 22,000 GET/HEAD requests per second.

The more prefixes you use, the better the performance you can get from an S3 bucket.
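The scaling arithmetic is just the per-prefix rate multiplied by the number of prefixes. A minimal sketch, using the per-prefix figures quoted above (the function names are illustrative):

```python
GET_HEAD_PER_PREFIX = 5500         # GET/HEAD requests per second per prefix
PUT_POST_DELETE_PER_PREFIX = 3500  # PUT/POST/DELETE requests per second per prefix


def max_read_rate(prefix_count: int) -> int:
    """Aggregate GET/HEAD requests per second when reads are spread evenly."""
    return prefix_count * GET_HEAD_PER_PREFIX


def max_write_rate(prefix_count: int) -> int:
    """Aggregate PUT/POST/DELETE requests per second across prefixes."""
    return prefix_count * PUT_POST_DELETE_PER_PREFIX


print(max_read_rate(2))   # 11000
print(max_read_rate(4))   # 22000
print(max_write_rate(4))  # 14000
```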

S3 limitations when using KMS

- If you are using SSE-KMS to encrypt your objects in S3, you must keep in mind the KMS limits.

- When you upload a file, you will call GenerateDataKey in the KMS API

- When you download a file, you will call Decrypt in the KMS API

KMS Request Rates —

- Uploading/downloading will count toward the KMS quota

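- The KMS quota is a hard ceiling for S3 requests unless you request a quota increase from AWS, so plan around it

- The quota is Region-specific: it is either 5,500, 10,000, or 30,000 requests per second.

So when request rates are a concern, it is better to use the default encryption (SSE-S3) rather than KMS, because it does not count toward the KMS quota and gives better performance.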
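To see how quickly the quota can be reached, here is a rough back-of-the-envelope sketch. It assumes exactly one KMS call per upload (GenerateDataKey) and one per download (Decrypt), as described above; the function names and the sample traffic figures are made up for illustration.

```python
def kms_requests_per_second(uploads_per_second: int, downloads_per_second: int) -> int:
    """Each upload and each download is assumed to cost one KMS API call."""
    return uploads_per_second + downloads_per_second


def quota_headroom(uploads_per_second: int, downloads_per_second: int,
                   kms_quota: int) -> int:
    """Remaining KMS requests per second; a negative value means throttling risk."""
    return kms_quota - kms_requests_per_second(uploads_per_second, downloads_per_second)


# Sample traffic against a Region with a 5,500 requests-per-second quota.
print(quota_headroom(2000, 3000, 5500))  # 500
print(quota_headroom(4000, 3000, 5500))  # -1500
```

Note that a single prefix can already serve 5,500 GET requests per second, so in a Region with the lowest quota the KMS limit, not S3 itself, can become the bottleneck.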

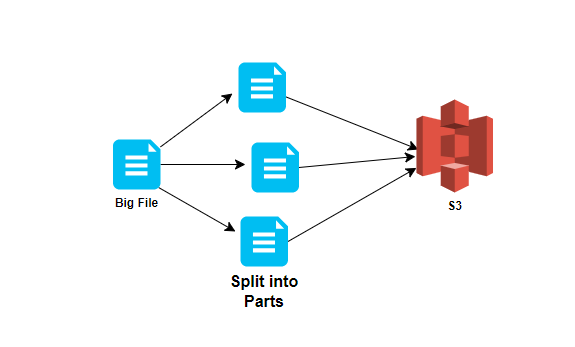

Uploads

Multipart Uploads —

- Recommended for files over 100 MB

- Required for files over 5 GB

- Parallelize uploads (increases efficiency)

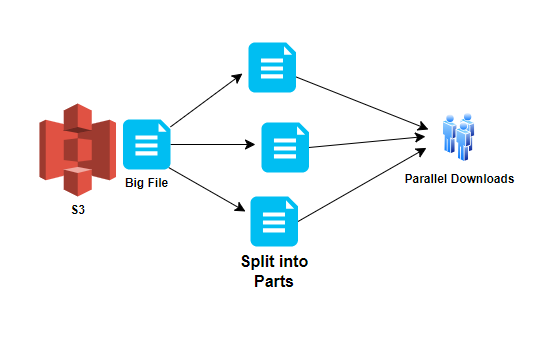
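The idea behind a multipart upload is to cut the file into parts that can be sent in parallel. The sketch below only plans the parts; it does not call S3. The `plan_parts` helper and the 100 MiB default part size are assumptions for illustration, while the 5 MiB minimum part size and the 10,000-part maximum are S3 limits.

```python
from typing import List, Tuple

MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB  # every part except the last must be at least 5 MiB
MAX_PARTS = 10_000       # a multipart upload can have at most 10,000 parts


def plan_parts(total_size: int, part_size: int = 100 * MIB) -> List[Tuple[int, int, int]]:
    """Return (part_number, offset, length) for each part of the upload."""
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the 5 MiB minimum")
    parts = []
    offset = 0
    part_number = 1  # part numbers start at 1
    while offset < total_size:
        length = min(part_size, total_size - offset)
        parts.append((part_number, offset, length))
        offset += length
        part_number += 1
    if len(parts) > MAX_PARTS:
        raise ValueError("too many parts; choose a larger part_size")
    return parts


# A 250 MiB file becomes two 100 MiB parts and one 50 MiB part.
for number, offset, length in plan_parts(250 * MIB):
    print(number, offset // MIB, length // MIB)
```

Each planned part can then be uploaded by a separate worker, and a failed part can be retried without resending the whole file.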

S3 Byte Range Fetches for Downloads —

- Parallelize downloads by specifying byte ranges

- If there are failures in the download, it’s only for a specific byte range

- Can be used to speed up downloads

- Can be used to download only part of a file (e.g., header information)
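A byte-range fetch is a GET request with an HTTP Range header. The sketch below only generates the header values for a parallel download; the `byte_ranges` helper and the sizes are illustrative. Note that the end of each range is inclusive.

```python
from typing import List


def byte_ranges(object_size: int, chunk_size: int) -> List[str]:
    """Return the Range header values that together cover the whole object."""
    ranges = []
    for start in range(0, object_size, chunk_size):
        end = min(start + chunk_size, object_size) - 1  # inclusive end offset
        ranges.append(f"bytes={start}-{end}")
    return ranges


print(byte_ranges(10, 4))  # ['bytes=0-3', 'bytes=4-7', 'bytes=8-9']
```

Each range can be requested by a separate worker, and if one request fails, only that range needs to be fetched again. Requesting just the first range is a simple way to read header information without downloading the whole file.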

Points to remember about S3 Performance Optimization—

- The prefix is the folder path inside the bucket: bucket-name/folder1/subfolder1 → /folder1/subfolder1

- You can also achieve a high number of requests: 3500 PUT/POST/DELETE and 5500 GET/HEAD requests per second per prefix.

- You can get better performance by spreading your reads across different prefixes. For example, if you are using 2 prefixes you can achieve 11,000 requests per second.

- If you are using SSE-KMS to encrypt your objects in S3, you must keep in mind the KMS limits.

- Uploading/downloading will count toward the KMS quota

- The KMS quota is a hard ceiling for S3 requests unless you request a quota increase from AWS

- The quota is Region-specific: it is either 5,500, 10,000, or 30,000 requests per second.

- Use multipart uploads to increase performance when uploading files to S3.

- Multipart uploads should be used for any file over 100 MB and must be used for any file over 5 GB.

- Use S3 byte-range fetches to increase performance when downloading files from S3.

Backing up data with S3 Replication

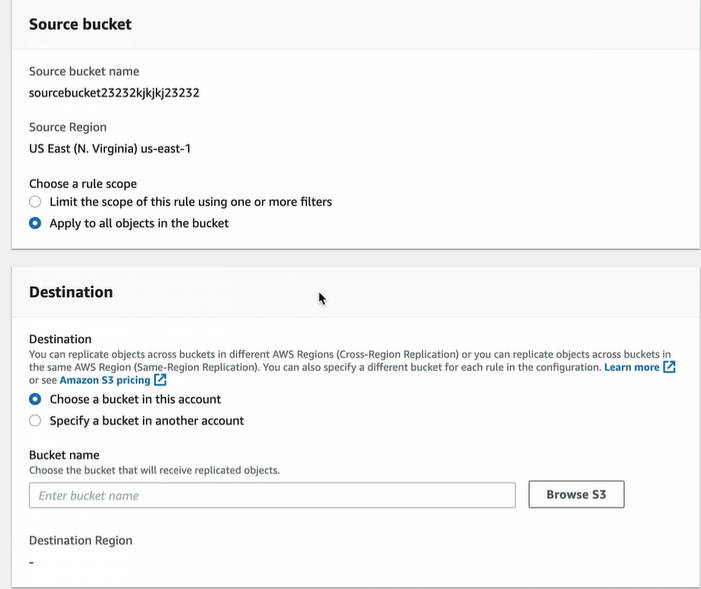

- You can replicate objects from one bucket to another: versioning must be enabled on both the source and destination buckets

- Objects in an existing bucket are not replicated automatically: once replication is turned on, all new and subsequently updated objects are replicated automatically

- Delete markers are not replicated by default: deleting an individual version or a delete marker in the source bucket is not replicated to the destination

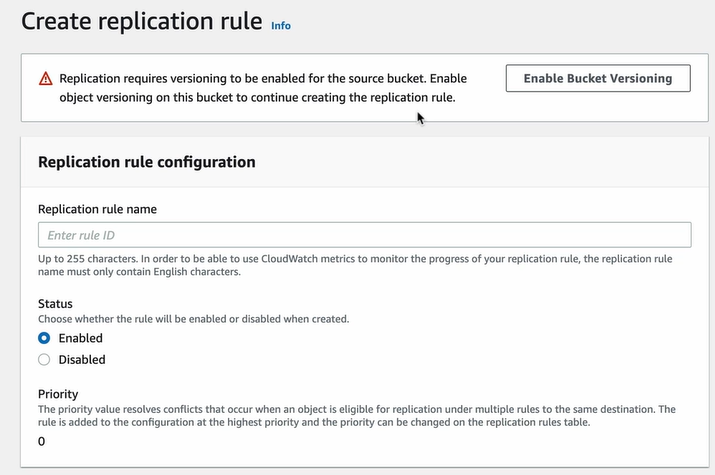
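For readers who prefer to script this, a replication rule can be described as a configuration document. The sketch below builds one as a Python dictionary in the shape that boto3's `put_bucket_replication` call takes as its `ReplicationConfiguration` argument. The role ARN, bucket name, rule ID, and the `build_replication_config` helper are placeholders, and the sketch does not call AWS.

```python
def build_replication_config(role_arn: str, destination_bucket: str,
                             rule_id: str = "replicate-all") -> dict:
    """Describe one rule that replicates every new object to the destination."""
    return {
        "Role": role_arn,  # IAM role that S3 assumes to copy the objects
        "Rules": [
            {
                "ID": rule_id,
                "Priority": 1,
                "Status": "Enabled",
                "Filter": {"Prefix": ""},  # empty prefix: apply to the whole bucket
                # Matches the default behaviour: delete markers are not replicated.
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
            }
        ],
    }


# Placeholder role and bucket names.
config = build_replication_config(
    "arn:aws:iam::111122223333:role/example-replication-role",
    "example-destination-bucket",
)
print(config["Rules"][0]["Destination"]["Bucket"])
```

Versioning still has to be enabled on both buckets before a configuration like this is accepted.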

Open the AWS Management Console > S3 > create 2 buckets, one source and one destination, in 2 different Regions > upload a file into the source bucket.

Go to the source bucket > Management > Replication rules > Create replication rule.

Bucket Versioning must be enabled on the source bucket, so click to enable it.

Give the replication rule a name and select the source and destination buckets.

Enable Bucket Versioning for the destination bucket as well. Keep the IAM role and the other settings as default and create the rule.

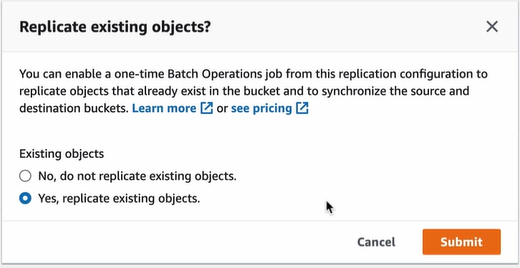

You will then be asked whether replication should also be enabled for existing objects.

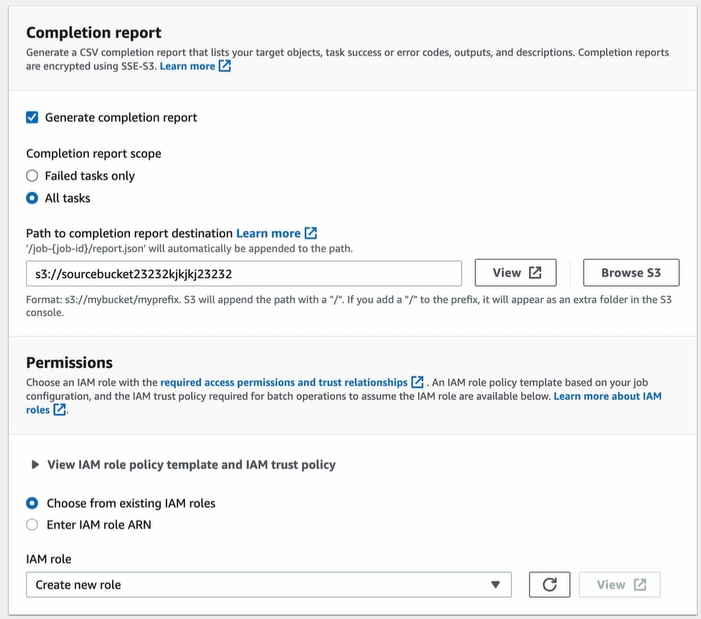

Choose the source bucket as the path for the report, leave the permissions as they are, and save.

Go to the destination bucket, where you can now see the objects from the source bucket.

These are some of the S3 essentials that give you a good working knowledge of the service for day-to-day use.

Happy Learning!!